NVIDIA is a global leader in accelerated computing, pioneering GPU technology that powers today’s most demanding workloads across AI, data science, visualization, and high-performance computing. From data centers to edge systems, NVIDIA designs hardware and software platforms that redefine performance, scalability, and energy efficiency. Its solutions enable organizations worldwide to push the boundaries of innovation in industries like research, healthcare, finance, manufacturing, and autonomous systems.

Accelerated Computing for Modern Workloads

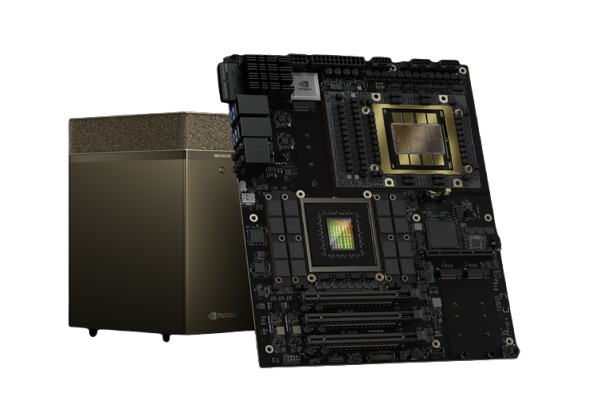

NVIDIA DGX systems are purpose-built for AI, HPC, and data-intensive workloads, delivering unmatched performance for compute, virtualization, visualization, and networking. Designed for enterprise scalability, DGX enables teams to train complex AI models, accelerate simulations, and process massive datasets with superior efficiency. Each system is optimized for maximum throughput and resource utilization, empowering organizations to unlock new levels of innovation.

Engineered for AI Performance and Flexibility

From individual DGX servers and workstations to fully integrated DGX POD environments, NVIDIA DGX solutions combine high-bandwidth networking, advanced GPU architecture, and enterprise-grade management tools. The result is a robust, scalable platform that accelerates AI development, streamlines operations, and ensures peak performance across hybrid or on-premises infrastructures.

Unlock Your AI Potential with Nextron

Ready to elevate your data center with NVIDIA DGX technology? Nextron is a certified NVIDIA Elite Partner and offers the full range of NVIDIA’s professional products. Our experts can guide you in selecting, configuring, and deploying the right DGX solution for your business in the following areas: